· Paul Kronlund-Drouault · 9 min read

Grammar, Logic and Meaning

Recent developments in the training of Large Language Models (LLMs) have seen the rise of prover models, with deepseek-r1-prover being the pioneer in the domain, and OpenAI quickly following with o3-pro, and even the latest GPT-5-Thinking. These models are highly optimized for logic and proof through Chain-of-Thought (CoT) enabled Reinforcement Learning on hard math problems. While this approach has gathered good results, especially with smaller models developed by companies such as Z.ai or Moonshot AI, it has several fundamental flaws that decrease both accuracy and efficiency.

Under the current training paradigm, LLMs have to internalize three very different notions at the same time:

- Grammar

- Logic

- Meaning/Purpose/Creativity ?

This structure makes the whole pipeline very hard to debug. Failures on benchmarks and evaluations can come from either syntax errors, logical inconsistencies (what Wittgenstein would call unsinnig1), or outright limitations of the model’s “intelligence”.

Some companies, like .txt (dottxt.co) are developing regex-based sampling solutions to fix the grammar aspect, in order to improve tool integration and augment reliability. This has proven successful, and has shown good results with a significant decrease in compute cost in some applications, but it doesn’t go far enough.

For one, this approach only covers inference, and doesn’t integrate in the training pipeline. And secondly, it doesn’t solve the main problem of logic.

Proposition 7 (P7)

At Unsuspicious Industries, we want to go several steps further in the process. In this post, we are introducing Proposition 7, our constrained generation system [WIP] that unifies typed programming, proof building, reasoning and natural language2.

Philosophy

Philosophy is the practice of asking questions. Mathematics is the branch of philosophy in which we can know if our answers are true or false.

Intelligence is best understood as the capacity to ask good questions, in other words, to make interesting choices. “Interesting” is not the same as “true”. We call this ability taste: a coherent model of what is beautiful, and worth looking into.3

Some of what we find interesting is also absurd: we can call it mysticism4. Mysticism is cool, and we dedicate a lot of time to it, through art, music, literature, and even love, but it’s not something machines can/should do. Nevertheless, we cannot work without it, because finding beauty in a theorem needs the same taste system as finding beauty in a painting, or a poem.

Being good at mathematics is less about the mechanics of proof (we can trivially prove statements, it’s just that most statements are boring) and more about choosing what to prove, and when, to advance a goal.

To build intelligent machines, we should optimize for taste, but make sure to stay within the bounds of true statements.

Humans naturally think with vague language, often outrunning strict truth conditions, and it makes sense in a messy world.

”In the real world it is more important that a proposition be interesting than that it be true.”

— A.N. Whitehead

In formal reasoning, the tradeoff is different. Our thinking systems must balance taste with formality: know which questions should be asked while respecting which questions can be asked. With constrained generation we want to restrict search space to formally valid outputs in a given formal system, and use the broad priors of LLMs as approximations of taste, allowing them to pick which path to explore.

In such a setup, “hallucination” loses its meaning: truth is enforced by the formal layer, while open-ended association remains contained in latent representations, or alternative channels. By itself this is not enough, and freely pretrained models may stall, so Proposition 7 combines it with a training pipeline that applies a custom loss to formally verified outputs to shape intelligence, and an entropy-based sampling system.

System Overview

- Constraint Engine: unified grammar and typing/proof rules that produce only valid moves/ASTs within a formal system.

- Syntax independence: the formally constrained sampler is made to be syntax independent and work over runtime-specified context grammars with attached typing rules.

- Constrained pretrained LLM: ranks valid moves, expressed as tokens.

- Mode-switching sampler: the constraint engine can dynamically switch it’s formal core, based on parameters like entropy, in order to avoid getting stuck. We can combine free-form text reasoning with formally valid output, for better exploration possibilities.

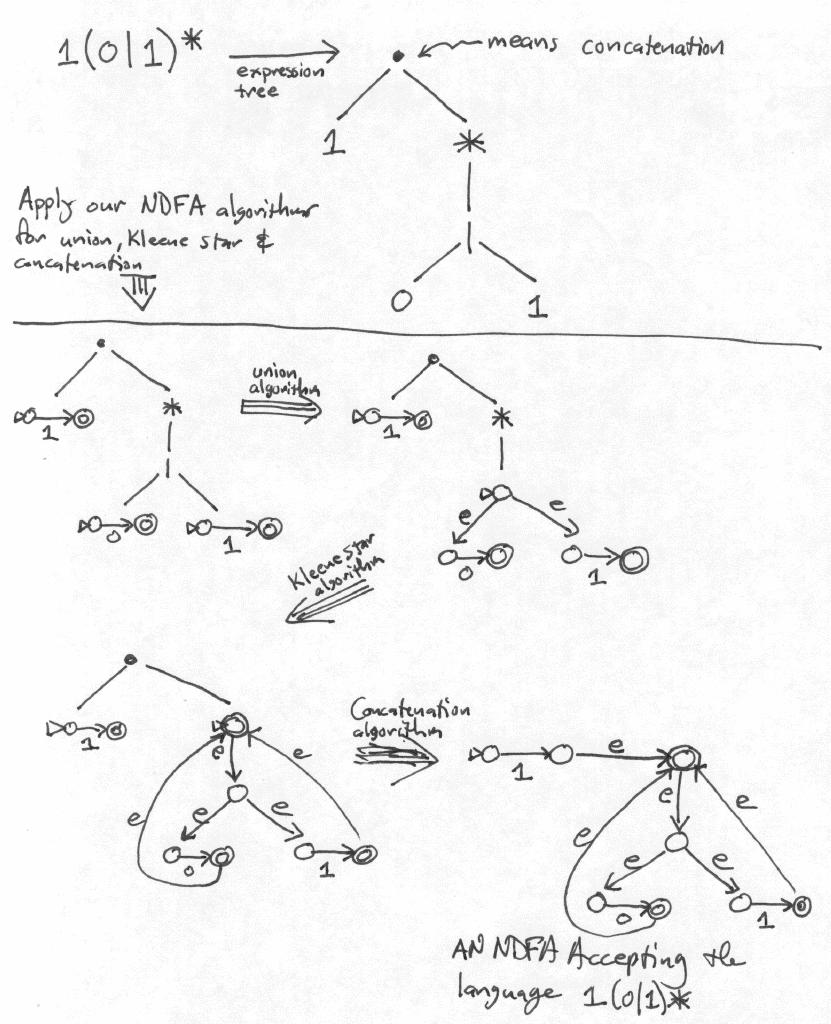

Constraint Engine

The engine is comprised of three parts:

- A meta-engine that inteprets rules to parse code into partial ASTs

- Rule files, written in a custom DSL inspired by the EBNF notation for grammars, and the natural deduction style for typing rules.

- A completion engine that unifies partial ASTs with rule conclusions to produce valid next tokens.

STLC Example:

Identifier ::= /[a-z][a-zA-Z0-9]*/

Variable(dec) ::= Identifier[x]

Lambda(lambda) ::= 'λ' Identifier[x] ':' Type[τ] '.' Expression[e]

Application(app) ::= Expression[e1] Expression[e2]

BaseType ::= Identifier[τ]

FunctionType ::= Type[τ1] '->' Type[τ2]

Type ::= BaseType | FunctionType | '(' Type ')'

Expression ::= Variable | Lambda | Application | '(' Expression ')'

x ∈ Γ

----------- (dec)

Γ ⊢ Γ(x)

Γ[x:τ1] ⊢ e : τ2

----------------------- (lambda)

Γ ⊢ τ1 → τ2

Γ ⊢ e1 : τ1 → τ2, Γ ⊢ e2 : τ1

-------------------------------- (app)

Γ ⊢ τ2

Meta Typing

The rule file itself is parsed and checked before being admitted, and “executed” as a constraint engine on the input.

Each rule undergoes a meta pass:

- Binding hygiene / scope sanity,

- Premise shape validation

- Well‑scoped metas resolved or flagged,

- Rule binding validity

Adding features can be done by supplying new constructor symbols alongside rules that relate them. If the meta checker accepts the file, the engine can generate with them without code changes. Reflection boundary: the system never executes object rules at meta time, it simulates them structurally to guarantee they cannot smuggle imperative effects (soundness guard). It’s some approximation of abstract interpretation. Notably, this means that the system must have persistent states, unlike CFG approaches like what’s done in Outlines, adding more complexity to the sampling system.

Sampling constraints can be dynamic, and most adapted to the problem you’re working with. This means we can easily switch from constraining in one language to another, dynamically.5 It also means that we can choose the best formal framework for the task at hand. We could even have algorithms that adapt the language to the problem, improving efficiency and expressiveness tradeoffs.

Generation loop:

- Parse input into a Partial AST, with open branches on right-completeable nodes.

- Unify with rule conclusions (up to declared conversion)

- Silently drop branch rules whose instantiated premises fail

- Emit admissible completions. Invalid states never materialize.

- Pass the the LLM to rank the valid moves (token sequences) according to learned taste.

A Paradox

- Constraints create freedom: removing correctness burden frees the model to explore style, and allows for more creative outputs, with mechanisms like temperature, or entropy sampling.

- Form enables expression: like meter in poetry, rules focus creativity6.

- Interesting-ness (or taste) resists definition but emerges from examples and use.

Architecture

Open Questions

- Exploration vs exploitation: scheduling diversity when all branches are already valid.

- Expressiveness vs decidability: managing search with intersections, unions, negation, refinements before adding heavier features (inductives, quantifiers).

- Entropy-driven mode switching: optimal triggers that surface genuine abstraction needs

These questions represent the core research challenges that would need to be addressed in developing P7 from proposition to working system.

Practical uses

- Practitioners: Use constraints to eliminate invalid states; devote model capacity to style and strategy.

- Decision-makers: Expect better efficiency (less wasted compute) and better interpretability and traceability of the process.

- Researchers: Treat interesting-ness as a learnable value function over valid moves; compare signals (structural, graph, human preference).

Implementation

A reference prototype for the P7 system is currently being developped, mainly by me, with collaboration and feedback from Niels Müdler @ ETHZ

The code is available freely on github/

References

Philosophy & Foundations of Mathematics

- Wittgenstein, L. (1921). Tractatus Logico-Philosophicus.

- Wittgenstein, L. (1953). Philosophical Investigations.

- Kant, I. (1790). Critique of Judgment.

Core Type Theory & Foundations

- Martin-Löf, P. (1984). Intuitionistic Type Theory.

- Girard, J.-Y. (1972). Interprétation fonctionnelle… (System F / cut elimination).

- Reynolds, J. C. (1974). Towards a theory of type structure. (Parametric polymorphism).

- Barendregt, H. (1992). Lambda calculi with types.

- Wadler, P. (2015). Propositions as Types.

Constrained Generation, Types & Synthesis

- Muendler, N., Antonov, N., & Vechev, M. (2025). Type-aware constraint generation for LLM code synthesis (ETH Zurich).

- Willard, B., & Louf, R. (2023). Efficient guided generation for large language models.

- Beurer-Kellner, L., Fischer, M., & Vechev, M. (2022). Prompting is Programming.

- Polikarpova, Kuraj, & Solar-Lezama (2016). Refinement type synthesis.

- Knuth, D. E. (1968). Semantics of context-free languages.

- Pierce, B. C., & Turner, D. N. (2000). Local type inference.

Notes

Footnotes

In Wittgenstein’s Tractatus Logico-Philosophicus, unsinnig (nonsensical) refers to propositions that violate logical grammar and are therefore meaningless, distinct from sinnlos (senseless) which refers to tautologies and contradictions that are logically well-formed but say nothing about the world. See propositions 4.461-4.4611. ↩

The project is named after the last proposition in the Tractatus: “Whereof one cannot speak, thereof one must be silent” (Wovon man nicht sprechen kann, darüber muß man schweigen). Our system aims to clearly delineate what can be formally expressed versus what belongs to the realm of the unsayable (mystical). ↩

As I was writing this essay, an Italian friend, studying philosophy in Padova was visiting me in Lyon and we’ve had many discussions about Kant’s third critique. In it, Kant describes aesthetic judgement (what I call here taste) as “a claim that [something] possesses the ‘form of finality’—that is, that it appears to have been designed with a purpose, even though it does not have any apparent practical function”. This idea of meaning transcending formal function (or truth) makes for a coherent model of intelligence. ↩

There is indeed the inexpressible. This shows itself; it is the mystical. (6.566) ↩

This approach draws inspiration from attribute grammars (Knuth, 1968) and bidirectional type checking (Pierce & Turner, 2000), but extends the concept to proof generation where syntactic and semantic validity are enforced simultaneously. It’s a bit unorthodox, but gives flexibility we wouldn’t have otherwise. ↩

I think we can find something interesting in “creative constraints”, that exist in many artistic traditions, like the formal restrictions of sonnets or George Perec’s La disparition (a book he wrote without using the letter “e”). I think it can be interesting to see how alignment differs within constrained environments. ↩