· Paul Kronlund-Drouault · 9 min read

A quick overview of RegEx

Regular Expressions (RegEx) are a very common tool in computer science, often used for search-and-replace operations, finding specific strings or patterns in large files, and validating user input (see the infamous email regex).

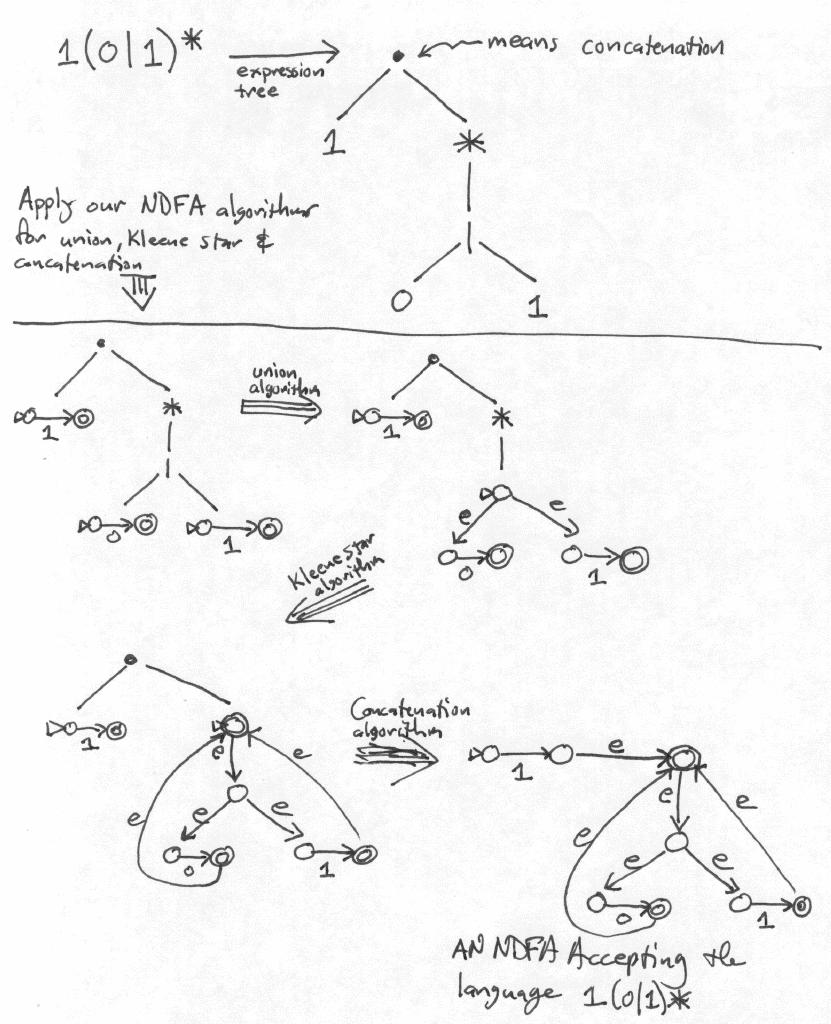

In introductory classes to theoretical computer science, we see regular expressions as an example application of automata theory, more precisely finite automata. The process of converting a RegEx into an automaton is called Thompson’s construction, and is behind grep’s unreal speed.

The problem at hand

When building Proposition 7, I was struggling with a very peculiar problem in program synthesis and input validation.

Correctness by complete-ability

The p7 expression parser is based on a very simple principle:

With an alphabet and a language, we can define a function

as follows:

Our goal here, for constrained generation, is to ensure that any prefix of a valid expression can be completed into a valid expression in and thus lead to a valid program of proof.

RegEx sub-problem

Since our language is defined by complex mechanisms, such as typing rules and recursive grammars, it must be handled by a Turing-complete model in the general case. However, a subset of this problem requires a generalized solution for RegEx.

Consider the following grammar as an example:

expr ::= /b(u|o|i)p/

term ::= expr ('and' expr)*

prog ::= term 'at' 'bap'

expressions between slashes (/<regex>/) are regexes

The program bip at bap is a trivial valid expression, as is bip and bop at bap. However, bip and is not a valid expression, but can be completed into one by adding another expr + at bap after the and. We can call it in .

Now, consider that we are working with a system that produces output byte by byte. How can we validate the expression bi or bip an? Both are prefixes of completeable expressions, but neither is a expression.

Formally, we could say that our grammar defines a language over an alphabet and that this alphabet is itself a language over the alphabet of bytes (or more generally corresponding to any vocabulary where and ).

Disambiguation of

can be decomposed as the union of all keywords in . Each element defines a language , where is either a singleton set (for fixed keywords) or a regular language (for patterns like identifiers)1:

For example, in our grammar:

- (a fixed keyword)

- (a regex pattern)

Each can be represented by a regular expression such that . Therefore:

Following this, we can define such that

Validation from to

To validate partial inputs over , we can define a function

as follows:

This function bridges the gap between the formal language’s alphabet and the model vocabulary, allowing us to validate all possible partial (complete-able) inputs.

in

Now that we have a function relating our model’s vocabulary to the formal language’s alphabet , we can define a function that directly relates the model’s vocabulary to the completeability in noted :

Let be a tokenization of input where are complete tokens and is the remaining partial input (noted if empty).

For disambiguation see2

This captures that we accept a partial input if either

- It fully tokenizes into element of and parses correctly in

- The partial token can be completed by some into a valid token such that and appending to the complete tokens yields an expression that is in .

Keeping with our example, bi and bip an are both in , as they can be completed into valid expressions in : bip and bip and that are themselves in . On the other hand, bip at bo is not in , because while it can be completed into the expression bip at bop, this expression is not in (it is even outright invalid).

Implementation

Focusing on the regex aspect of the program, we want to implement for the case where is defined by a regex . We cannot trivially do this by directly manipulating automata, but need a more general mechanism on the expressions themselves. In short, we need something that can tell us if a string is a valid prefix of a string in .

One approach is find the set of suffixes , and check if is non-empty. The completing suffix of is known as the quotient of with respect to , and is written . The expression that can produce this set of quotients is known as the Brzozowski derivative of with respect to , and is written .

We work with a standard regular-expression syntax over the alphabet to define :

- (empty language), (empty word)

- literals and character ranges

- union , concatenation and Kleene star

Let denote the language of regex , so that for some regex . The (left) quotient of a language by a character is

We also introduce (“nullable”) as the predicate that holds exactly when :

We can then define our Brzozowski derivative ( with ):

We can then extend the derivative to arbitrary strings by left-folding on characters (over ):

Equivalently, we apply recursively for ,

Correctness connects completion to nullability. For the keyword-recognizing regex (where ):

This is precisely the test we need for complete-ability in : given a partial input , compute and check :

cases evaluated in order for disambiguation

Correspondence to code

The Rust definition below implements and then folds it over a string :

fn char_derivative(&self, c: char) -> Regex {

use Regex::*;

match self {

Empty => Empty,

Epsilon => Empty,

Char(ch) => if *ch == c { Epsilon } else { Empty },

Range(start, end) => if *start <= c && c <= *end { Epsilon } else { Empty },

Union(a, b) => Union(Box::new((**a).char_derivative(c)), Box::new((**b).char_derivative(c))),

Concat(a, b) => {

let da = a.char_derivative(c);

if a.nullable() {

Union(

Box::new(Concat(Box::new(da), Box::new((**b).clone()))),

Box::new(b.char_derivative(c)),

)

} else {

Concat(Box::new(da), Box::new((**b).clone()))

}

}

Star(r) => Concat(Box::new((**r).char_derivative(c)), Box::new(Star(r.clone()))),

}

}

pub fn derivative(&self, s: &str) -> Regex {

s.chars().fold(self.clone(), |acc, c| acc.char_derivative(c))

}

In practice, one also applies algebraic simplifications after each construction (e.g., , , ) to control expression growth.

In the real implementation, we use the Brzozowski derivative not only to implement , but also as a policy for the sampling system in the constrained decoding core of Proposition 7.

Conclusion

In a real world implementaiton with grammar defined in EBNF-like format and an input over , we can implement as follows:

- Tokenize into tokens over .

- If tokenization succeeds and is fully tokenized, return

- If tokenization fails with remaning input , continue to step 2

- Compute where

- Build the set of possible next continuations in

- Check if such that where .

- If yes, return accept

- Otherwise, return reject

For the precise use case of constrained generation, and are passed to the sampling system and applied as filters on the model’s vocabulary to only allow tokens that can complete into a valid expression.

Obviously, this is only a small piece in the puzzle, and a lot of challenges remain with the implementation of on arbitrary, context-dependent, languages, with context and type dependencies. It’s still cool to see how basic concepts like this have deep implications and practical value in real-world systems.

Footnotes

For the purposes of this article, we assume tokenization follows the longest match principle: when multiple tokens could match a prefix of the input, the longest matching token is selected. This ensures deterministic tokenization and prevents ambiguity in the decomposition above. For instance, if both ‘a’ and ‘and’ are in , the input “and” tokenizes uniquely as the token ‘and’ rather than ‘a’ followed by remaining input “nd”. ↩

Since guarantees that some completion of exists, the second case checks whether at least one such completion (noted here ) validates the condition and yields a -completeable expression. For constrained sampling, finding any valid path forward suffices. ↩